Lab 3: Likelihood!

NRES 746

Fall 2025

Maximum likelihood and optimization

These next couple weeks are focused on estimating model parameters and confidence intervals using maximum likelihood methods.

Likelihood represents the probability of drawing your particular data set given a fully specified model (e.g., a regression model with a particular vector of coefficients, \(\theta\)):

\[L(\theta) = P(data | \theta)\]

This lab is designed to take two lab sessions to complete.

As with all labs in this course, your answers will either take the form of R functions (submitted as part of an R script) or short written responses submitted via WebCampus.

Please complete all assignments by the due date listed in WebCampus. You can work in groups but please submit the materials individually.

When writing functions, it’s often easiest to start off by writing a script, and then when you have everything working properly, wrap the script into a function.

Don’t forget to test your functions!

And remember that your final submission should only contain your functions- not any ancillary code you may have used to develop and test your functions.

Please comment your code thoroughly. Don’t be afraid of for loops and inefficient coding practices, as long as it gets the job done!

Example: reed frog predation data

Load the reed frog predation data from the Bolker book- it can be found here or loaded from the ‘emdbook’ package (“ReedfrogPred”). Save this file to your working directory.

This dataset represents predation data for Hyperolius spinigularis (Vonesh and Bolker 2005). You can read more about this data set in the Bolker book.

# Reed frog example ----------------

rfp <- emdbook::ReedfrogPred # load the data using the 'emdbook' package:

head(rfp)## density pred size surv propsurv

## 1 10 no big 9 0.9

## 2 10 no big 10 1.0

## 3 10 no big 7 0.7

## 4 10 no big 10 1.0

## 5 10 no small 9 0.9

## 6 10 no small 9 0.9Because predation on tadpoles is size and density-dependent, we will subset these data to a single size class (‘small’) and density (10) for all treatments including a predator (this simplifies the problem!). Subset your data now:

# Take a subset of the data

rfp_sub <- subset(rfp, (rfp$pred=='pred')&(rfp$size=="small")&(rfp$density==10))

rfp_sub## density pred size surv propsurv

## 13 10 pred small 7 0.7

## 14 10 pred small 5 0.5

## 15 10 pred small 9 0.9

## 16 10 pred small 9 0.9For each individual, the per-trial probability of being eaten by a predator is a binomial process (i.e., they can survive or die during the interval). Recall that the likelihood that k out of N individuals are eaten as a function of the per capita predation probability p is:

\[Prob(k|p,N) = \binom{N}{k}p^{k}(1-p)^{N-k}\]

Since the observations are independent, the joint likelihood of the whole data set is the product of the likelihood of each individual observation. So, if we have n observations, each with the same total number of tadpoles N, and the number of tadpoles killed in the ith observation is ki, then the likelihood is:

\[\mathcal{L} = \prod_{i=1}^{n}\binom{N}{k_{i}}p^{k_{i}}(1-p)^{N-k_{i}}\]

Here we assume the data are binomially distributed – the binomial distribution is the natural choice for data that are represented as k ‘successes’ out of N ’trials. We conventionally work in terms of the log-likelihood (\(\ell\)), which is:

\[\ell = \sum_{i=1}^{n}\left [log\binom{N}{k_i}+k_{i}log(p)+(N-k_{i})log(1-p) \right ]\]

In R we can represent the data likelihood as follows:

rfp_sub$killed <- with(rfp_sub, density-surv) # make column for number killed in each replicate trial

with(rfp_sub, sum(dbinom(killed, 10, prob=0.5, log=TRUE)) ) # expression of data likelihood(log scale)## [1] -12.8038There is only one parameter (“unknown”) in this calculation: p.

We know how many individuals we started with (N = 10 for each trial) and how many were killed/eaten in each trial (k = 3, 5, 1, and 1). So we want to solve for the most likely value of p given our observations of N and the number of tadpoles eaten.

In essence we do this by picking a possible value of p (which can only range from 0 to 1), calculating the log-likelihood using the equation above, picking another value of p, completing the equation, etc. until we exhaust all possible values of p and identify the one having the highest log-likelihood.

Of course R has useful built in functions to help us optimize the likelihood function!

The “dbinom()” function calculates the likelihood for a binomially distributed response variable.

Here, the response variable is the number of tadpoles eaten, and we are modeling the probability \(p\) of being eaten by a predator!

Given our observed k (number killed), and N = 10 for each trial, what is the likelihood of our data given that that p is 0.5?

L = dbinom(rfp_sub$killed,size=10,prob=0.5) # evaluate data likelihood with p=0.5

L## [1] 0.117187500 0.246093750 0.009765625 0.009765625What is the likelihood of observing all 4 of our outcomes, assuming independent replicates?

prod(L) # joint data likelihood## [1] 2.750312e-06The joint likelihood value gets smaller and smaller each time we add more data (can you see why?), and can become very tiny very quickly. This is one of the reasons we prefer to work with log-likelihoods (which yield larger numbers and have very nice mathematical properties).

Taking the log of a value between 0 and 1 (like a probability mass) yields a negative number, which is why we often see that our log likelihood values are negative.

We can build on these ideas to estimate the likelihood over the entire possible parameter space (probability of being eaten- which can range from 0 to 1).

First we generate a sequence of plausible values for our free parameter, \(p_{eaten}\).

p_seq <- seq(0.01, 1, length=100) # prepare for visualizing the likelihood across parameter spaceFor every value of p we will calculate the binomial probability of the data.

Lik <- sapply(p_seq, function(t) prod(dbinom(rfp_sub$killed,10,prob=t)) )

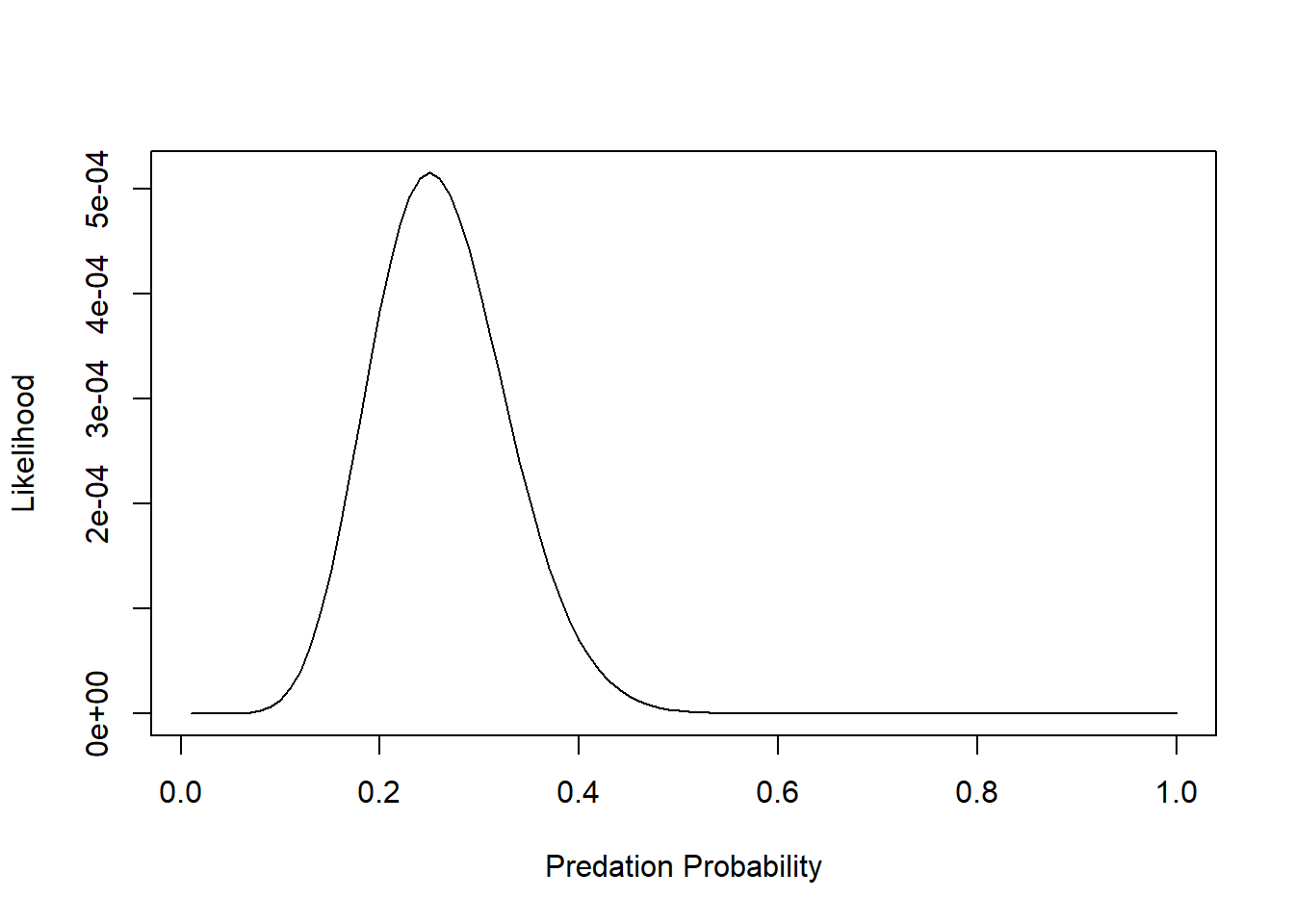

plot(Lik~p_seq,lty="solid",type="l", xlab="Predation Probability", ylab="Likelihood") Q: is this a probability distribution?

Q: is this a probability distribution?

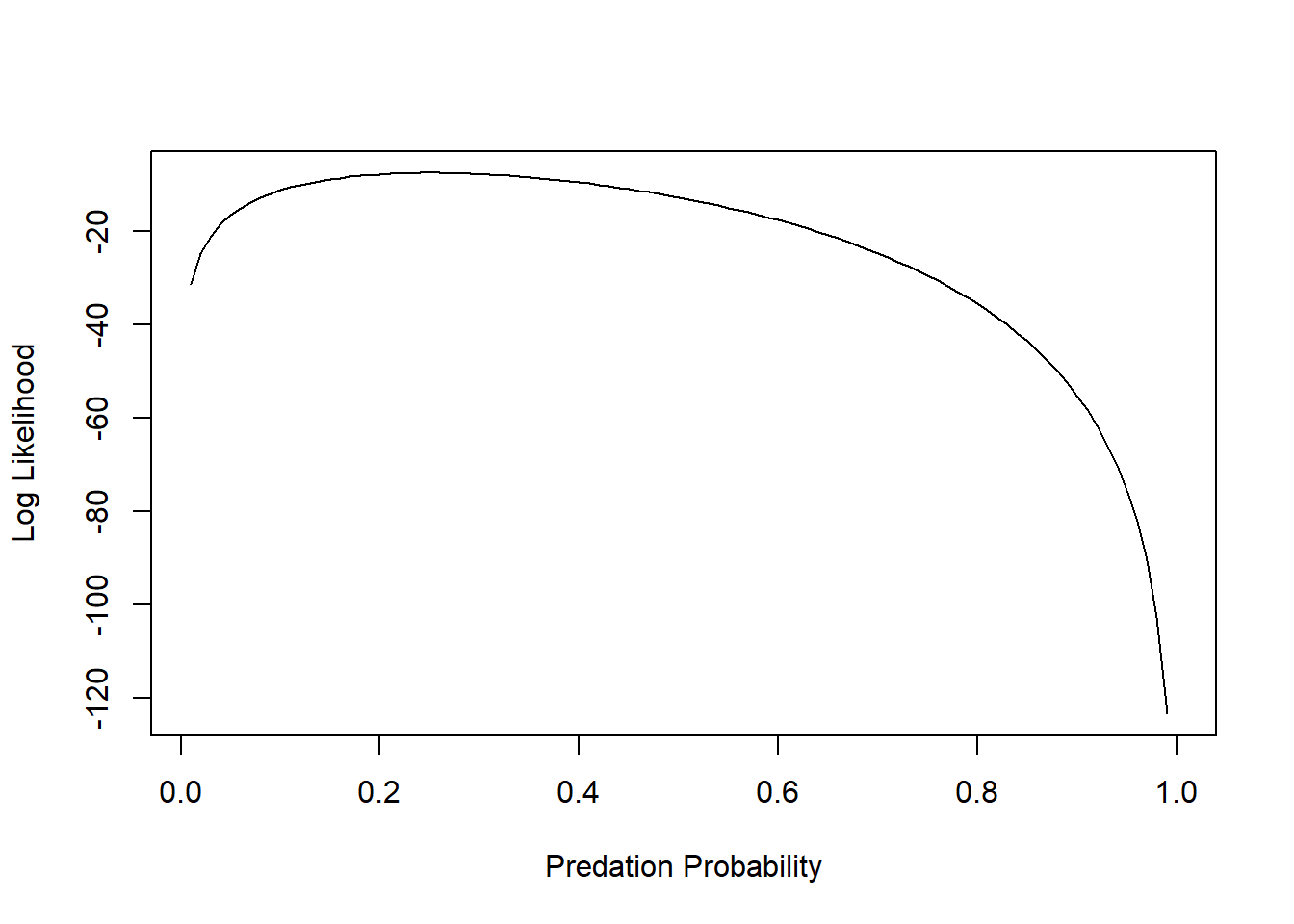

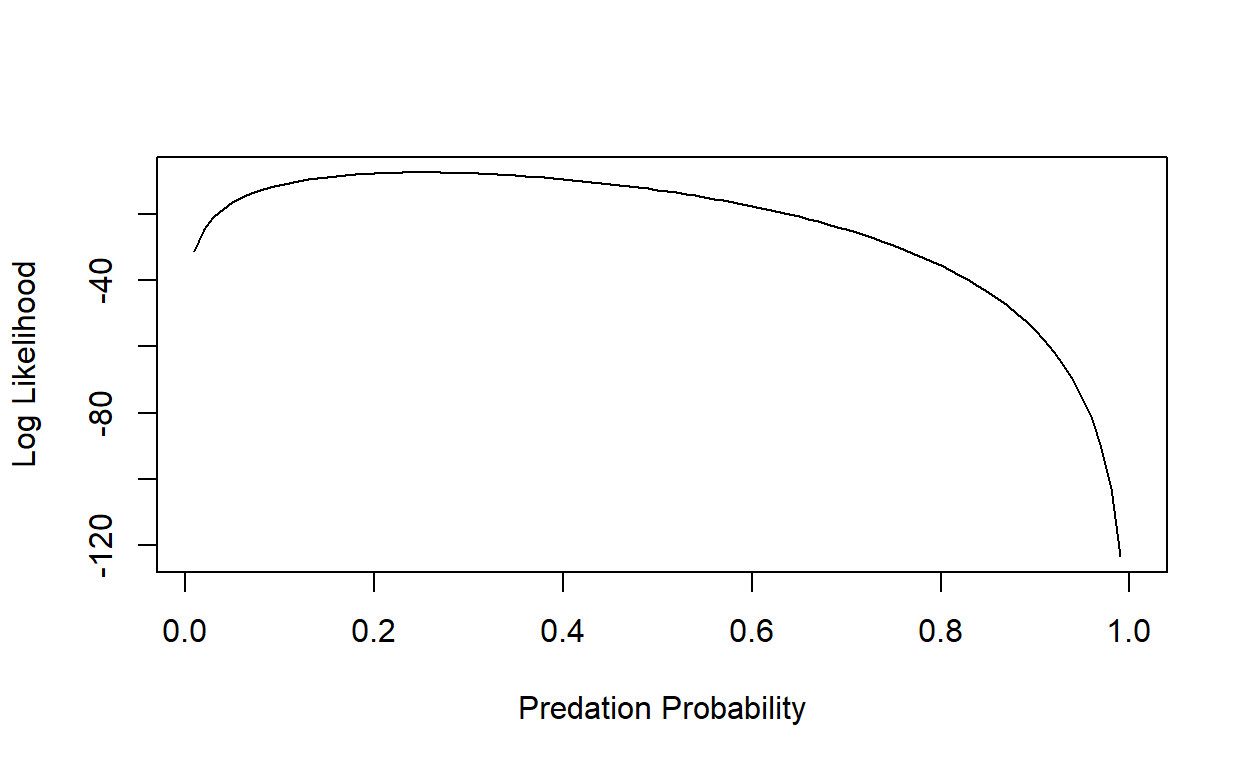

Let’s look at the log-likelihood:

# plot out the log-likelihood

LogLik <- sapply(p_seq, function(t) sum(dbinom(rfp_sub$killed,10,prob=t,log=T)) )

plot(LogLik~p_seq,lty="solid",type="l", xlab="Predation Probability", ylab="Log Likelihood")

The maximum likelihood estimate (MLE) is the parameter value that maximizes the likelihood function.

p_seq[which.max(LogLik)] # MLE for probability of predation## [1] 0.25And we can add an “abline()” to indicate the maximum Log-Likelihood estimate:

plot(LogLik~p_seq,lty="solid",type="l", xlab="Predation Probability", ylab="Log Likelihood")

abline(v=0.25,lwd=3)

Alternatively, we can use the optim() or mle2() functions to find the maximum likelihood estimate.

Although we want the maximum likelihood estimate, in practice we generally minimize the negative log-likelihood. To do this, we first write a function to calculate the negative log-likelihood function:

# Write a likelihood function

binomNLL1 <- function(p) {

-sum(dbinom(rfp_sub$killed, size=10, prob=p, log=TRUE))

}As we did in class, you can use the ‘optim()’ function to minimize your negative log-likelihood function (‘binomNLL1()’) given a vector of starting parameters and your data. The starting parameters need not be accurate, but do need to be reasonable for the function to work, that’s why we spent time in class eyeballing curves (also read the Bolker book for a discussion of the ‘method of moments’, which can help you get reasonable starting values!). Given that there is only one estimable parameter, p, in the binomial function, you only need to provide a starting estimate for it. Calculate the negative log-likelihood:

# use "optim()" to find the MLE

opt1 <- optim(fn=binomNLL1, par = c(p=0.5), method = "BFGS") # use "optim()" to estimate the parameter value that maximizes the likelihood function You may get several warning messages, can you think why? opt1 returns a list that stores information about your optimization process.

opt1 # check out the results of "optim()"## $par

## p

## 0.2500002

##

## $value

## [1] 7.571315

##

## $counts

## function gradient

## 17 7

##

## $convergence

## [1] 0

##

## $message

## NULLMLE = opt1$par

MaxLik = opt1$valueThe important bits are the parameter estimate and the maximum likelihood value.

But the first thing to check is whether or not the optimizer achieved convergence.

opt1$convergence## [1] 0Here a value of 0 means convergence has been achieved, a value of 1 means the process failed to converge. There is more info about convergence and alternative optimization options in Chapter 7 of the Bolker book.

This numerically computed answer is (almost exactly) equal to the theoretical answer of 0.25.

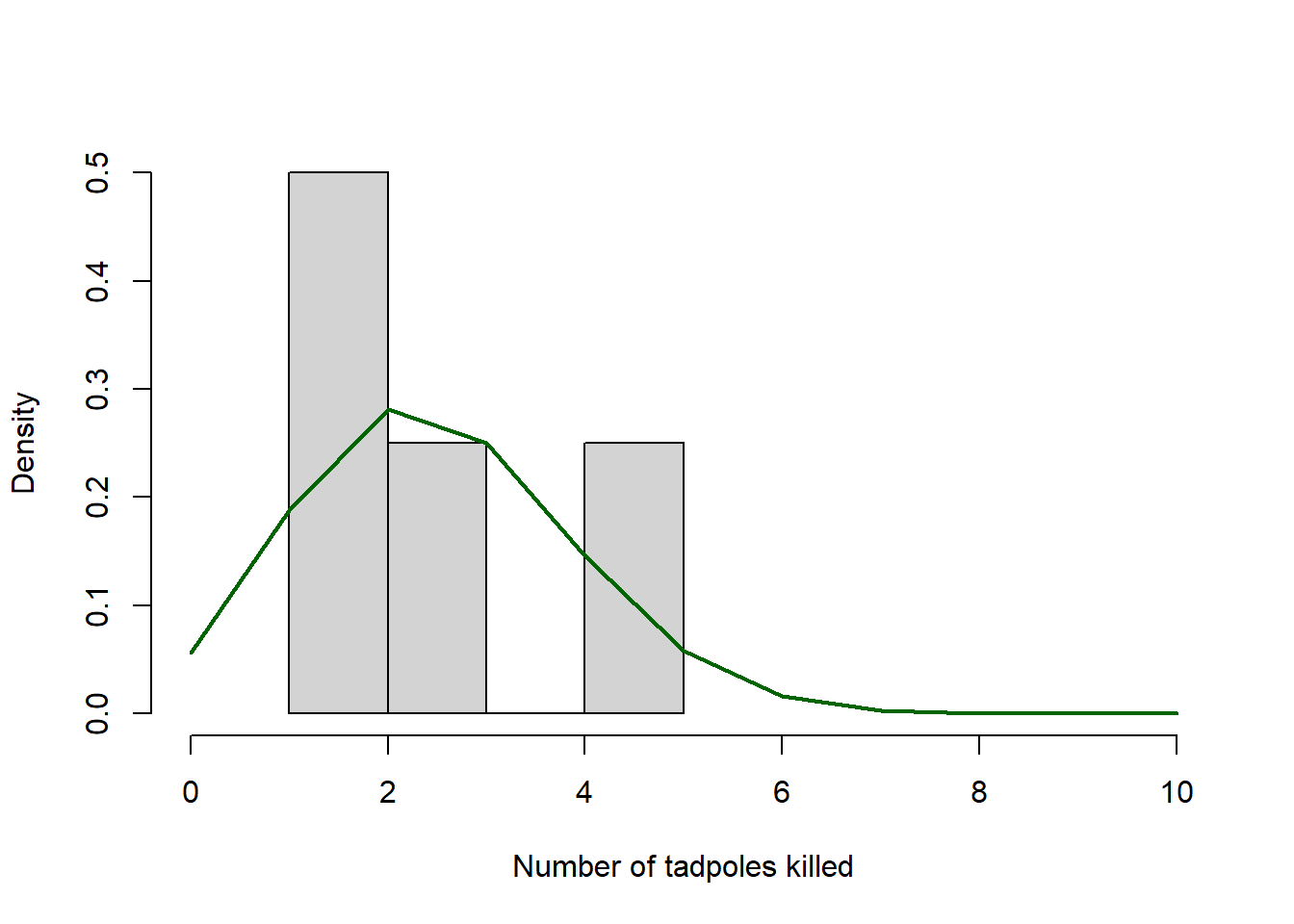

Plot your observed outcomes against your predictions under the maximum likelihood model:

hist(rfp_sub$killed,xlim=c(0,10),freq=F,main="",xlab="Number of tadpoles killed")

curve(dbinom(x,prob=MLE,size=10),add=T,from=0,to=10,n=11,lwd=2, col="darkgreen")

Note that “freq=F” scales the y-axis of a histogram to “density”, which allows us to overlay probability density functions.

Exercise 3.1: building a custom likelihood function

In this exercise you will develop and use a likelihood function for the following scenario: you are a frog biologist studying a rare species of a wetland-obligate frog species. You visit three known-occupied wetland sites ten (10) times and for each site you record the number of visits for which your target species is detected (at least one call within a 5 minute period). Your data are as follows: 3,2 and 6 detections for each of the three sites respectively.

Exercise 3.1a.

Write a likelihood function called “NLL_frogOccupancy()” for computing the negative log-likelihood for the above scenario. Your likelihood function should compute the negative log-likelihood of these data: [3,2 and 6 detections for sites 1, 2, and 3 respectively] for any detection probability \(p\), assuming that all sites are continuously occupied. You should assume for now that all sites have the same detection probability.

- input:

- p = a single number specifying a proposed value (initial value) for the parameter “p” (probability of detection for a single visit – which happens to be the only free parameter in this model)

- suggested algorithm:

- up to you! (HINT: use binomial distribution) (NOTE: do not use ‘optim()’ here: you are just asked to compute the likelihood at any specified parameter (input argument “p”), not to find the maximum likelihood estimate)

- return:

- the negative log likelihood of your data (a single number)

Include your function in your submitted r script!

And test your function! For example:

NLL_frogOccupancy(p=0.5) # test your function## [1] 6.853154Exercise 3.1b.

On WebCampus please respond briefly to the following questions:

- What is the approximate maximum likelihood estimate for the

p (detection probability) parameter? How did you use your

likelihood function to get your answer (briefly)?

- Using the “rule of 2”, what is the approximate 95% confidence interval for the p (probability of detection) parameter.

Exercise 3.2: Adding a Deterministic Relationship

So we’ve looked at how to obtain the likelihood given a stochastic model (the binomial distribution), but now we can consider more interesting questions – like the effects of covariates on our process of interest.

Recall that we subset our data above because we expected survival to be (in part) density-dependent. Here we’ll consider how to model the probability of tadpoles being eaten as a function of the initial density of tadpoles in the population. Specifically, the response represents the number of tadpoles eaten by the predator (dragonfly larvae) over the 2-week experiment. The predictor variable is the initial density of tadpoles in each mesocosm.

First, examine the first few lines:

# 3.2a ------------------

rffr <- emdbook::ReedfrogFuncresp # from Bolker's "emdbook" package

# ?Reedfrog # learn more about this dataset

head(rffr)## Initial Killed

## 1 5 1

## 2 5 2

## 3 10 5

## 4 10 6

## 5 15 10

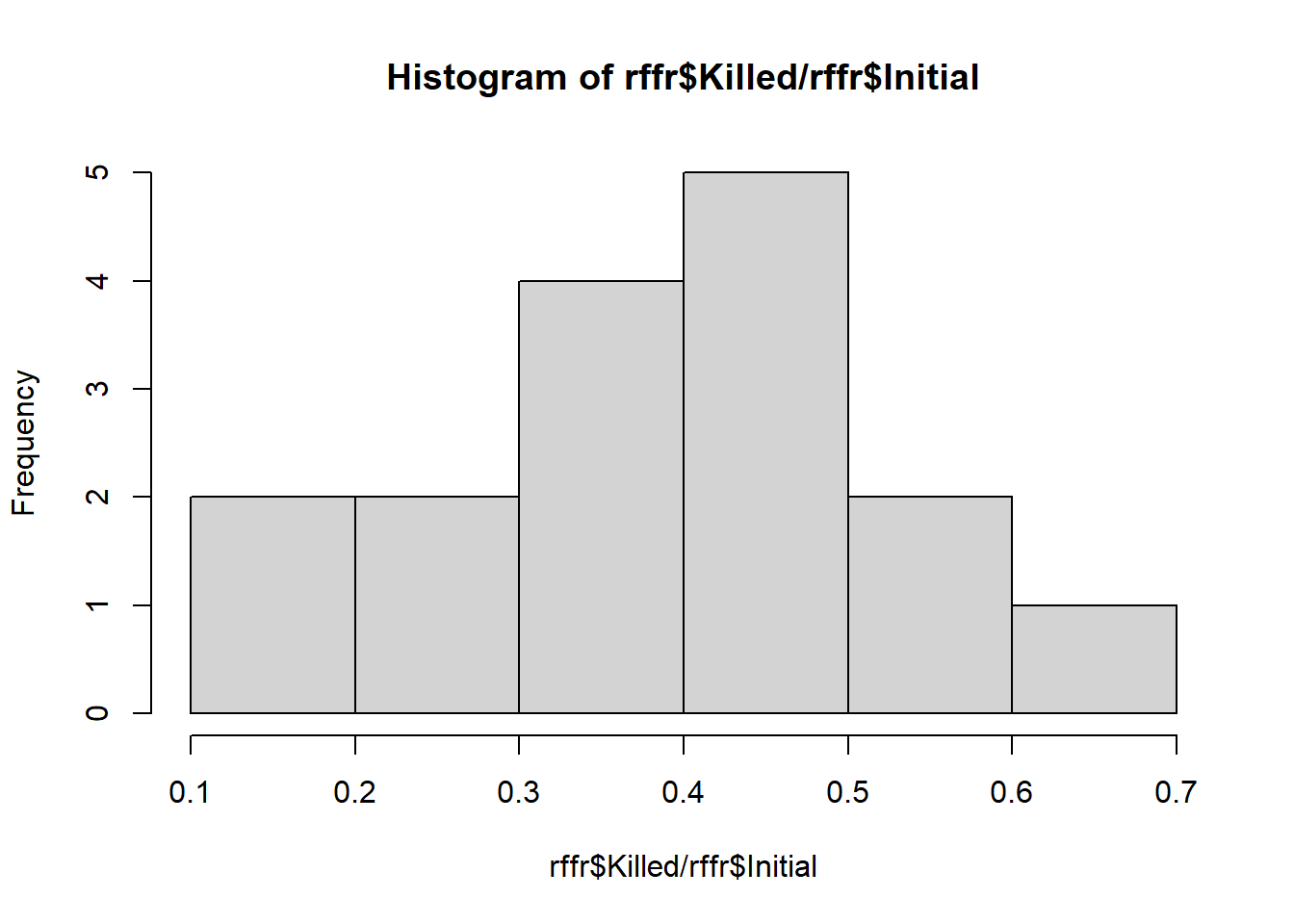

## 6 15 9Let’s look at the distribution of the data (probability of being killed).

hist(rffr$Killed/rffr$Initial)

Based on what we know about the data, let’s use a binomial distribution to describe the observed number of tadpoles killed.

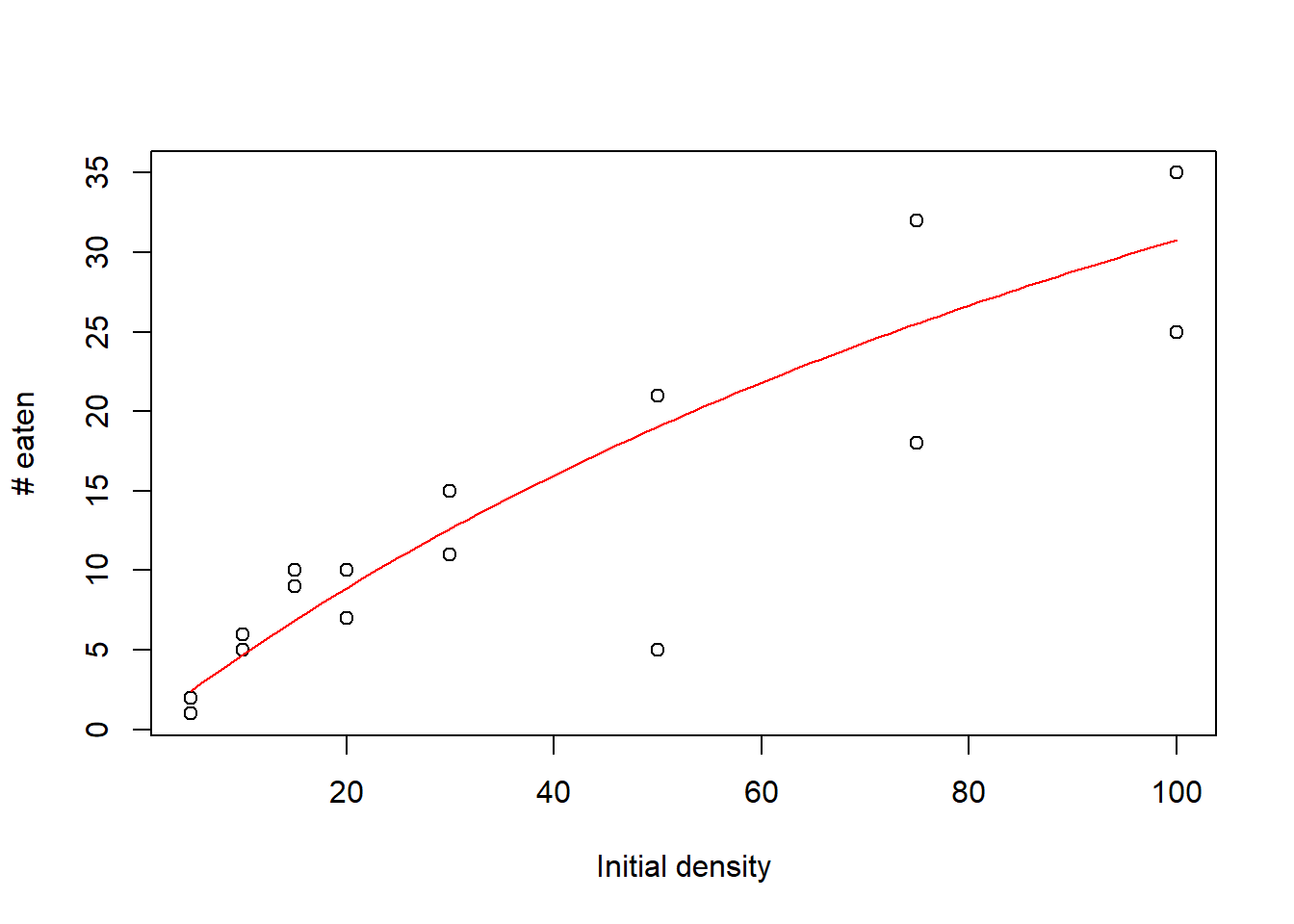

Plot the number of tadpoles killed as a function of the initial density (e.g., using ggplot()) to see what sort of deterministic function would describe the pattern.

It looks like it could be linear, but we know that predators become handling-limited (saturated) at high prey densities.

On page 182 Bolker indicates that if predation rate= \(aN/(1+ahN)\) (saturation curve, known in ecology as a Holling Type II functional response), this means that the per-capita predation rate of tadpoles decreases with tadpole density hyperbolically:

\[PredRate = (a/(1 + ahN))\]

We’ll use this deterministic function for our data.

First, let’s see what that saturation curve would look like over our data points with an initial guess at the parameters (we always need an initial guess to get our optimization algorithm started).

Recall that the a parameter of this hyperbolic function indicates the initial slope, which we’ll guess to be around 0.5, and the h parameter indicates 1/asymptote, which we fiddled around with to match the data (so try 1/80).

This looks pretty good, but we want to actually fit the line to the data instead of making guesses, and we’ll use maximum likelihood to do that.

Just like before, we’ll write a negative log likelihood function, but this time we’ll incorporate the deterministic model!

Exercise 3.2a.

Write a function called “binomNLL2()” for computing the data likelihood for this model. This likelihood function should reflect that the data are drawn from a binomial distribution (either killed or not), and that the probability of predation (the number killed divided by the initial number) is explained by the Holling type II equation.

- input:

- params = named vector of initial values for the params to estimate (length 2, with elements named ‘a’ and ‘h’ from the Holling type II functional response- in that order)

- suggested algorithm:

- up to you! Make sure you use the Holling type II function to model the number killed at each density. Use the ‘dbinom()’ function to represent the stochastic process that generated these data. Note that the probability of being eaten (p parameter in the binomial distribution) can be computed as the expected number killed divided by the total initial density.

- return:

- the negative log-likelihood of the data under this model

You can test your function using something like the following:

binomNLL2(c(a=0.4,h=1/200))## [1] 4.706114Now we can find the parameter values that best describe these data using ‘optim()’. We’ll use the same initial values for a and h that we used to plot the curve. N is the initial number of tadpoles, and k is the number of tadpoles killed.

opt2 <- optim(c(a=0.5,h=(1/80)), binomNLL2) #use default simplex algorithm

MLE = opt2$par

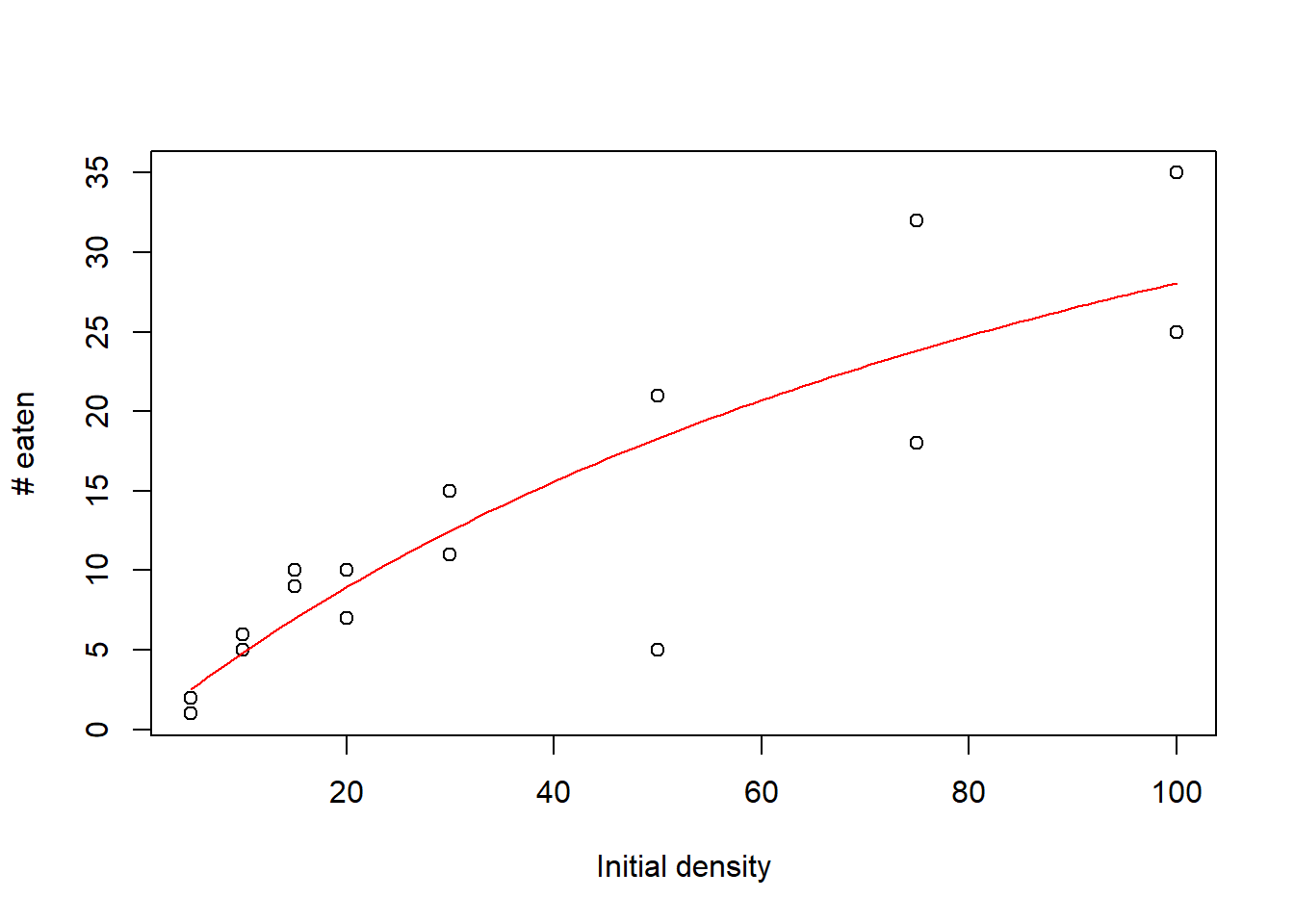

MaxLik = opt2$valueThe results are not that different from our starting values, so we made a good guess!

Let’s visualize the results!

plot(rffr$Killed~rffr$Initial, xlab="Initial density",ylab="# eaten")

curve(Holl2(x, a=MLE["a"], h=MLE["h"]), add=TRUE,col="red")

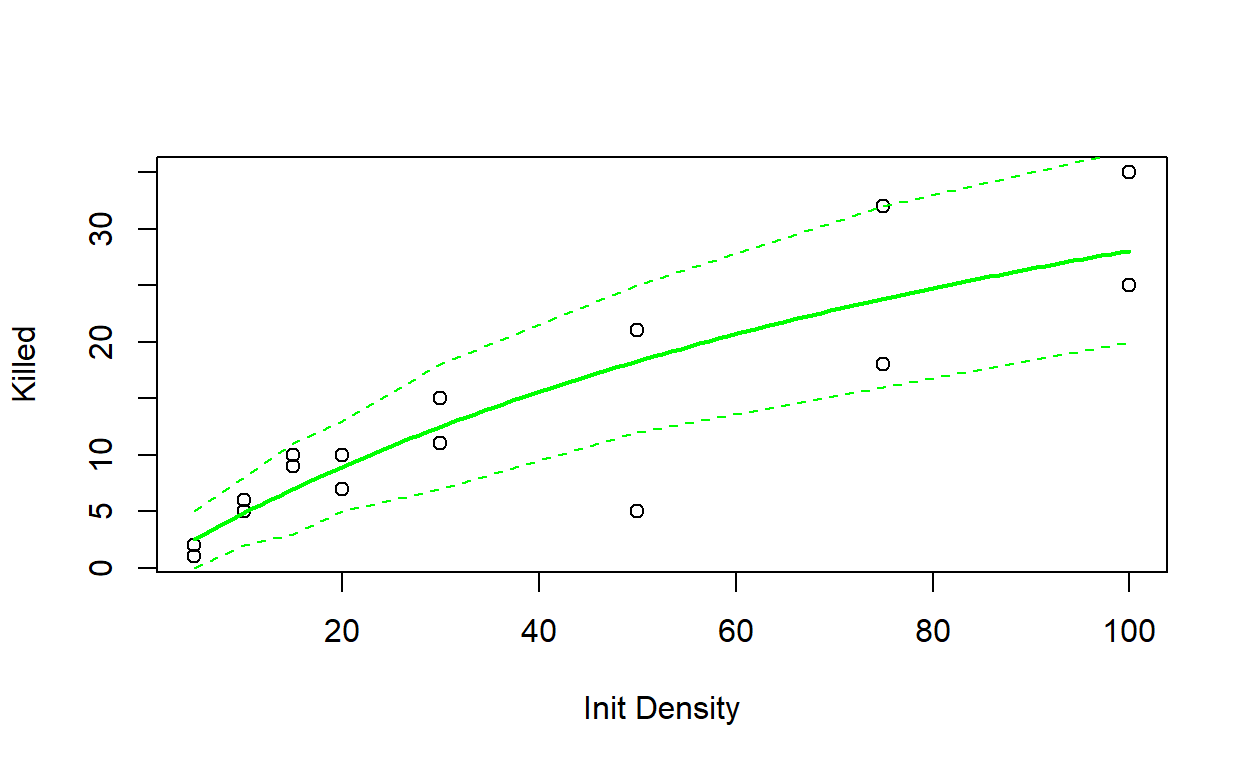

Exercise 3.2b.

Write a function called “Rffuncresp()” for plotting the goodness-of-fit for this model.

- input:

- params = named vector containing the MLE for the params in this

model (length 2: a and h from the Holling type II functional response-

in that order, named “a” and “h”, respectively)

- data = a data frame of 2 columns and one row per observation. The first column should represent the initial prey densities, and the second column should represent the number eaten by the predator over the 2-week experiment.

- params = named vector containing the MLE for the params in this

model (length 2: a and h from the Holling type II functional response-

in that order, named “a” and “h”, respectively)

- suggested algorithm:

- Plot the observed number killed (y axis) vs the initial

densities

- Overlay a line to visualize the expected number killed based on the MLE parameters.

- Finally, visualize “plug-in prediction intervals” around your MLE line to make a plot like Figure 6.5a in the Bolker book. You can use “qbinom()” to define the 90% quantiles of the binomial distribution for every point along your curve (see below).

- Plot the observed number killed (y axis) vs the initial

densities

- return:

- a data frame with 4 columns: The first column should represent a vector of initial densities that span the range of values in the experiment the second column should represent the expected number killed based on the MLE, the third and fourth columns should represent the lower and upper bounds of the 90% ‘plug-in’ confidence interval, respectively.

Include your function in your submitted r script!

You can test the function using something like this:

Rffuncresp(params=MLE,data=rffr)

## x y lwr upr

## 1 3 1.537532 0 3

## 2 4 2.032743 0 4

## 3 5 2.519665 0 5

## 4 6 2.998505 1 5

## 5 7 3.469463 1 6

## 6 8 3.932732 1 7

## 7 9 4.388498 2 7

## 8 10 4.836943 2 8

## 9 11 5.278241 2 8

## 10 12 5.712562 2 9

## 11 13 6.140069 3 10

## 12 14 6.560923 3 10

## 13 15 6.975277 3 11

## 14 16 7.383281 4 11

## 15 17 7.785079 4 12

## 16 18 8.180812 4 12

## 17 19 8.570617 4 13

## 18 20 8.954625 5 13

## 19 21 9.332964 5 14

## 20 22 9.705761 5 14

## 21 23 10.073134 6 15

## 22 24 10.435203 6 15

## 23 25 10.792081 6 16

## 24 26 11.143878 6 16

## 25 27 11.490703 7 17

## 26 28 11.832660 7 17

## 27 29 12.169851 7 17

## 28 30 12.502375 7 18

## 29 31 12.830328 8 18

## 30 32 13.153804 8 19

## 31 33 13.472894 8 19

## 32 34 13.787686 8 19

## 33 35 14.098267 9 20

## 34 36 14.404720 9 20

## 35 37 14.707128 9 21

## 36 38 15.005569 9 21

## 37 39 15.300122 9 21

## 38 40 15.590863 10 22

## 39 41 15.877863 10 22

## 40 42 16.161196 10 22

## 41 43 16.440931 10 23

## 42 44 16.717136 11 23

## 43 45 16.989877 11 23

## 44 46 17.259220 11 24

## 45 47 17.525227 11 24

## 46 48 17.787961 11 24

## 47 49 18.047480 12 25

## 48 50 18.303844 12 25

## 49 51 18.557110 12 25

## 50 52 18.807334 12 26

## 51 53 19.054570 12 26

## 52 54 19.298871 13 26

## 53 55 19.540290 13 27

## 54 56 19.778877 13 27

## 55 57 20.014681 13 27

## 56 58 20.247752 13 27

## 57 59 20.478135 13 28

## 58 60 20.705878 14 28

## 59 61 20.931026 14 28

## 60 62 21.153622 14 29

## 61 63 21.373711 14 29

## 62 64 21.591333 14 29

## 63 65 21.806530 15 29

## 64 66 22.019343 15 30

## 65 67 22.229811 15 30

## 66 68 22.437973 15 30

## 67 69 22.643865 15 30

## 68 70 22.847526 15 31

## 69 71 23.048990 16 31

## 70 72 23.248295 16 31

## 71 73 23.445473 16 31

## 72 74 23.640559 16 32

## 73 75 23.833586 16 32

## 74 76 24.024587 16 32

## 75 77 24.213593 16 32

## 76 78 24.400635 17 33

## 77 79 24.585744 17 33

## 78 80 24.768949 17 33

## 79 81 24.950281 17 33

## 80 82 25.129766 17 33

## 81 83 25.307434 17 34

## 82 84 25.483312 17 34

## 83 85 25.657426 18 34

## 84 86 25.829804 18 34

## 85 87 26.000471 18 35

## 86 88 26.169452 18 35

## 87 89 26.336773 18 35

## 88 90 26.502457 18 35

## 89 91 26.666528 18 35

## 90 92 26.829011 19 36

## 91 93 26.989927 19 36

## 92 94 27.149299 19 36

## 93 95 27.307151 19 36

## 94 96 27.463502 19 36

## 95 97 27.618375 19 37

## 96 98 27.771790 19 37

## 97 99 27.923768 19 37

## 98 100 28.074330 20 37

## 99 101 28.223493 20 37

## 100 102 28.371279 20 37NOTE: the “prediction interval” you are asked to generate here is sometimes called a “plug-in” prediction interval, and is a quick and dirty way to assess model goodness-of-fit. You just take the best-fit model (with parameter values at their MLE values) and use the quantiles of the stochastic process (here, the binomial distribution) to define the range of data that would generally be produced under this model.

NOTE: For this exercise, you can use the ratio of the tadpoles killed versus the initial tadpole density values to get a probability (probability of being killed), which you can then use as an input parameter for the binomial distribution. For example:

# how to generate 'plug-in' prediction intervals

xvec <- seq(40,80,5)

yvec <- 0.5/(1+0.5*0.015*xvec) * xvec

upper<-qbinom(0.975,prob=yvec/xvec, size=xvec)

lower<-qbinom(0.025,prob=yvec/xvec, size=xvec)

upper

lowerExercise 3.2c.

On WebCampus, respond briefly to the following question:

Bolker calls the prediction interval you generated above a plug-in prediction interval. In what way(s) is this interval different than a true prediction interval? Would you feel comfortable reporting a ‘plug-in’ prediction interval in a scientific publication, or to stakeholders interested in your model results?

Review of the MLE process

Step 1. Identify the response and explanatory variables (e.g., Predation probability and Initial Population Size). Just stating what the response and explanatory variables are will help you start modeling.

Step 2. Determine one or more candidate stochastic process(es) that may govern variability among observations in your system (e.g., error is Binomially distributed). In the tadpole case, the stochastic distribution was easy to identify because the data were in the form of successes in a finite set of trials (which yields a binomial distribution under independence assumptions). Other times it may not be so clear what the best distribution is, and looking at the histogram and plotting different distributions over the top will be helpful.

Step 3. Specify the deterministic function – e.g., a mathematical function that governs the relationship between a predictor variable and the mean response (e.g., Holling type II). Here, we chose this function mechanistically – we expect predator functional response to saturate with increasing prey density.

Step 4. Write a function for expressing the negative log-likelihood of the data given our deterministic expectations and the stochastic distribution. Our negative log likelihood function in the tadpole functional response example combined the stochastic and deterministic elements together by having the stochastic parameter (in this case the binomial probability, p) be dependent upon the deterministic parameters.

Step 5. Make a guess for the initial parameters (e.g., a=0.5, h=1/80). You need to have an initial guess at the parameters to make ‘optim()’ work, and we plotted the Holling curve to make our guess. Sometimes you will also need to make a guess at the parameters for the stochastic distribution. In these cases, the method of moments is often a good and simple option for finding the parameters of non-normal probability distributions (see Bolker book for details).

Step 6. Estimate the best-fit parameters using maximum likelihood. We used optim() to search through all possible value combinations of parameters a and h to estimates for those parameters that correspond to the minimum negative log-likelihood.

Step 7. Add confidence or prediction intervals around your estimates to represent uncertainty. We calculated some plug-in estimates to put pseudo-prediction intervals around our estimates based on the stochastic distribution.

Exercise 3.3: Likelihood and Confidence Intervals

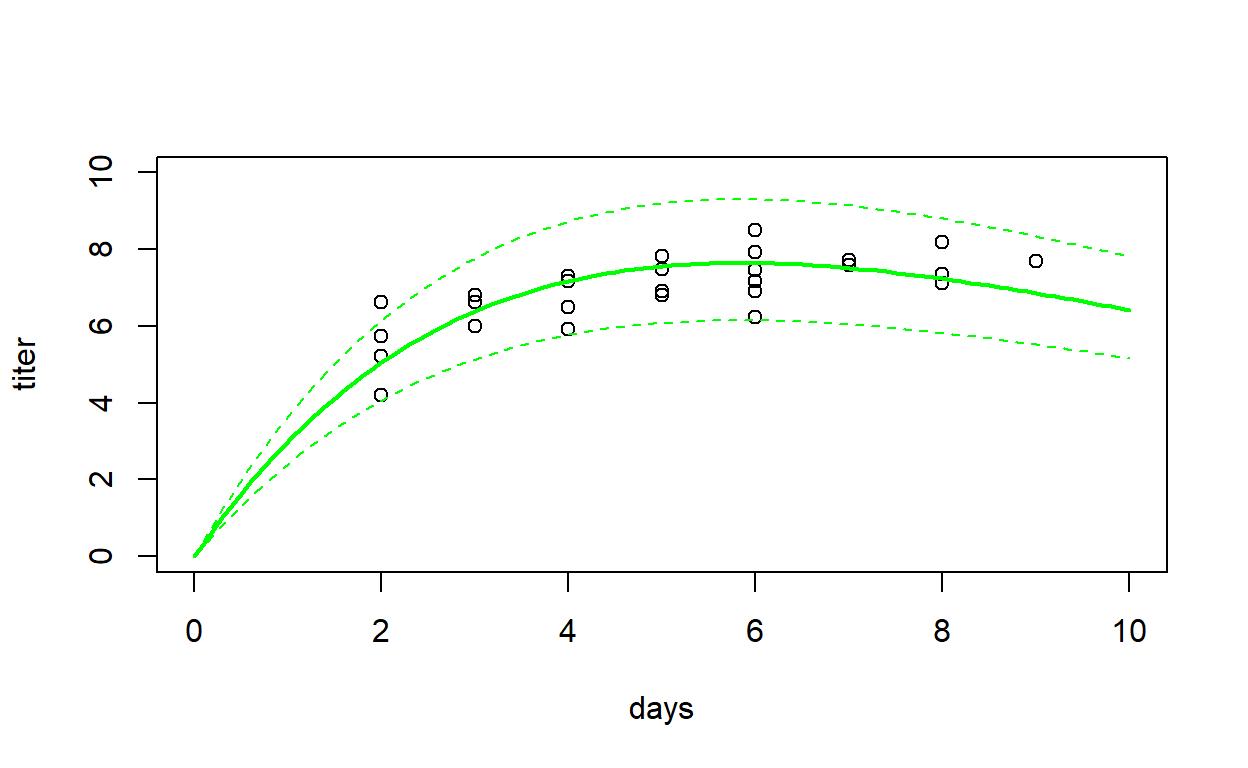

Let’s continue the myxomatosis virus titer example from the optimization lecture. The difference is that this time we’ll model a deterministic process (change in viral loads over time) in addition to the stochastic process. Our goal is to fit a model to data on viral titers through time for the viruses that are grade 1 (highest virulence). Biological common sense, and data from other titer levels, tells us to expect titer levels to start at zero, increase over time to a peak, and then to decline. Given those expectations, we’ll fit a Ricker model to these data, following Bolker’s example and extending it just a bit.

Our goals are to:

- Find the maximum likelihood estimates of the parameters of the

Ricker model fit to the myxomatosis data.

- Visualize the fit of this model to the data by:

- Plotting the data

- Adding the predicted Ricker curve

- Adding plug-in confidence intervals

- Computing confidence intervals for MLE params

- Computing confidence intervals for predictions

You can add the data from the ‘emdbook’ package. Select just the grade 1 titers:

# Exercise 3.3a -----------

library(emdbook)

data(MyxoTiter_sum) # load the data

# head(MyxoTiter_sum)

myxdat <- subset(MyxoTiter_sum, grade==1) # select just the most virulent strain

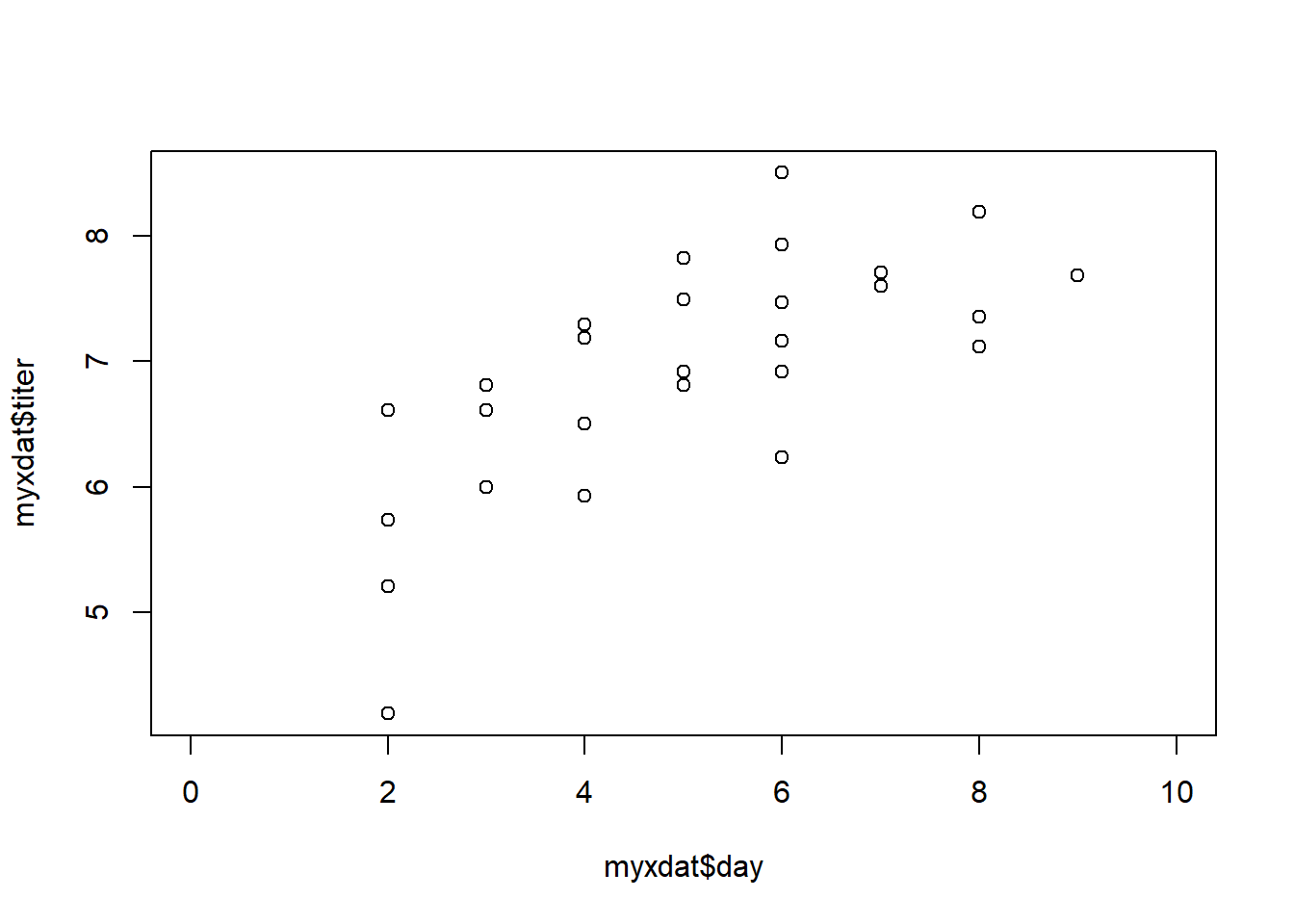

plot(myxdat$titer~myxdat$day,xlim=c(0,10)) # visualize the relationship

Exercise 3.3a.

Fit a Ricker model to the myxomatosis data – that is, use a Ricker deterministic equation to model the expected (mean) virus titer as a function of days since infection (see below for step-by step instructions).

Write a function called “NLL_myxRicker()” for computing the data likelihood and plotting the goodness-of-fit for this model.

- input:

- params = named vector of initial values for the params to estimate (length 3: a and b params from the Ricker model [see below], and the rate parameter of the Gamma distribution)(names should be “a”,“b”, and “rate”)

- suggested algorithm:

- Compute the deterministic function: use a Ricker deterministic equation to model the mean virus titer as a function of days since infection

- Compute the “shape” parameter of the Gamma distribution as a function of (1) mean virus titer and (2) the “rate” parameter of the Gamma distribution [NOTE: the variance and the mean of the Gamma distribution are dependent- so we can’t simply model the expected value and the noise separately! See below for more details]

- Compute the sum of the negative log-likelihoods of all observations (use the “dgamma()” function)

- NOTE: you can assume the existence of the “myxdat” data object in the workspace, exactly as in the above code block.

- return:

- the negative of the sum of the log-likelihoods of all observations (a single number)

This task (develop likelihood function) can be broken down into a few steps, just like we did above!

Our question is: how does a virus titer change in rabbits as a function of time since infection? This is almost exactly the same problem as we just did (above), but we’re using different distributions and functions.

Step 1. Identify the response and explanatory variables. The response is the virus titer and the explanatory variable is the days since infection.

Step 2. Determine the stochastic

distribution.

Start by plotting the histogram of the response variable. Bolker

suggests a gamma distribution – does it look like a gamma would work?

Write down the parameters for the gamma distribution (page 133). (For

the gamma distribution, use with the shape and scale parameters (not the

rate)).

Step 3. Specify the deterministic

function.

Plot the data points (hint: look at figure 6.5b). Bolker suggests the

Ricker curve – does it look like the Ricker curve would work? Write down

the equation and parameters for the Ricker curve (page 94).

Step 4. Specify the likelihood of the data given our deterministic expectations and the stochastic distribution. Take a moment to think how the parameters of the stochastic distribution are determined by the parameters of the deterministic function. For the gamma distribution, both the shape and scale parameters are related to the mean of the distribution, i.e., mean = shape / rate (page 133)(or shape * scale). So how will you specify that the deterministic function (the Ricker model) should represent the mean? What parameters do you need your (negative) log-likelihood function to estimate? Write out your negative log-likelihood function to solve for the likelihood.

ASIDE: the mean and variance of the gamma distribution are inter-related: the shape parameter can be specified as: \(\frac{mean^2}{var}\) and the rate parameter can be specified as \(\frac{mean}{var}\)!

Include your function in your submitted r script!

You can test your function using something like this:

NLL_myxRicker(params=c(a=4,b=0.2,rate=2)) # test the function## [1] 44.56117We can then optimize our likelihood function using ‘optim()’. This takes us through the next couple steps in the maximum likelihood process outlined above.

Step 5. Make a guess for the initial parameters. We need initial parameters to put into “optim()”. Remember that the Ricker curve parameters can be estimated based on the initial slope and maximum (see pg. 95). Try plotting the curve over the points to get an approximate fit. There really isn’t any easier way to get there than trial and error for the deterministic function.

Step 6. Estimate the best fit parameters using

maximum likelihood.

Now use ‘optim()’ to get your maximum likelihood parameter

estimates.

temp <- optim(NLL_myxRicker,par = c(a=2,b=0.2,rate=2))

MLE = temp$par

MinNLL = temp$value

MLE## a b rate

## 3.4736659 0.1674329 14.4231248Exercise 3.3b.

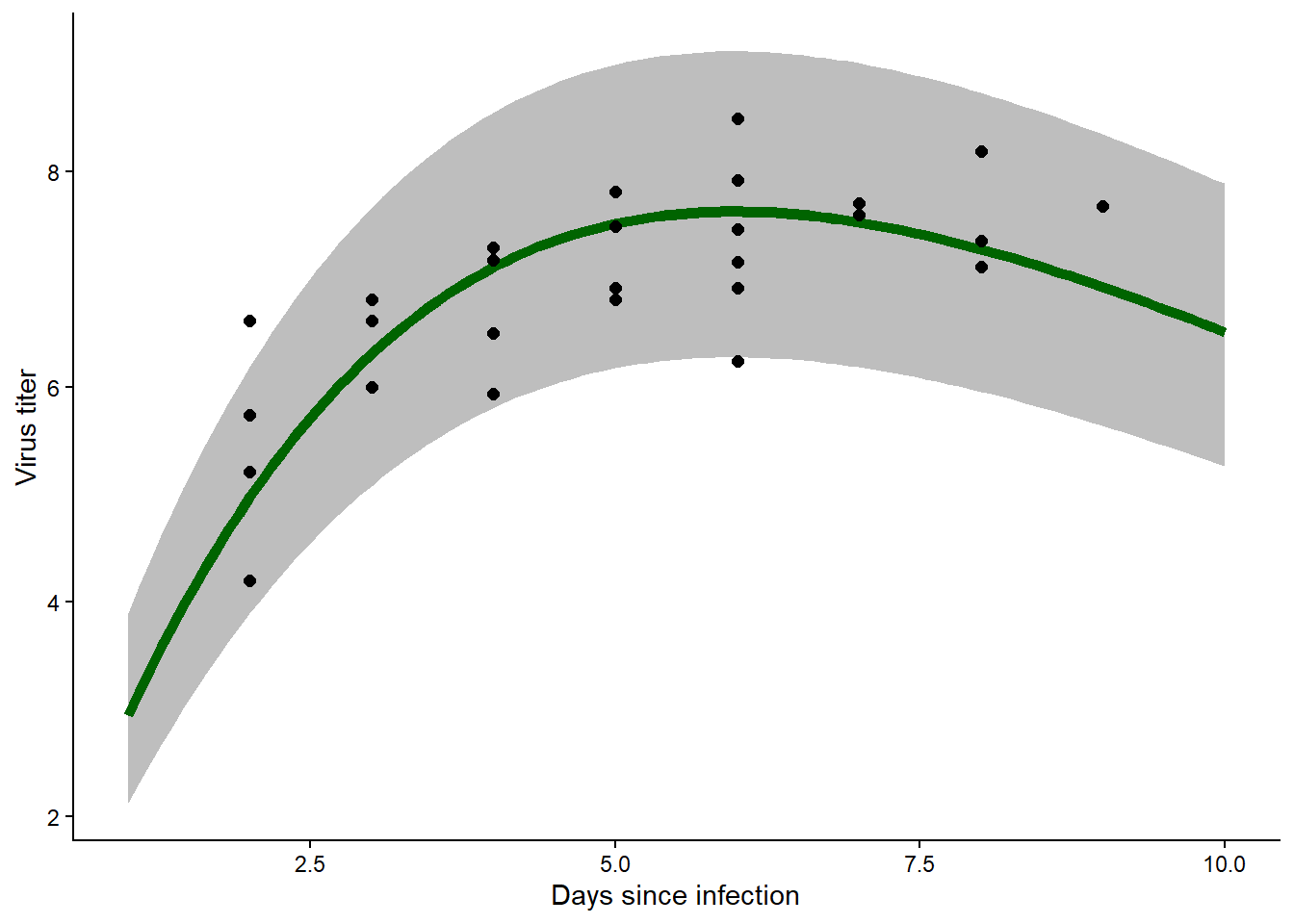

Write a function called “predict_myxRicker1()” for computing the maximum likelihood estimates and plotting the goodness-of-fit for this model.

- input:

- mle = named vector of MLE values for the model params (length 3: a and b params from the Ricker model [see below], and the rate parameter of the Gamma distribution) (named “a”, “b”, and “rate”, in that order)

- suggested algorithm:

- make a data frame with a column “x” spanning the range of the time-since-infection (predictor variable, in days). [I suggest making this vector at least 100 elements]

- add a column with the predictions from the Ricker model based on the MLE parameters (just the Ricker parameters) across the range of times since exposure.

- add columns to denote the lower and upper bounds of the plug-in

prediction interval around your MLE predictions. Hint: use “qgamma()” to

define the 95% quantiles of the Gamma distribution for every point along

your curve (see below).

- Use ggplot to plot the observed virus titer (y axis) vs the days since infection (x axis).

- Overlay a line to visualize your predictions from the Ricker model

- Finally, visualize “plug-in prediction intervals” around your MLE line to make a plot similar to Figure 6.5a in the Bolker book.

- return:

- df: a data frame with 4 columns:

- x, representing a vector ranging across the time span of the experiment (in units of days)

- mean, representing the expected virus titer under the MLE parameters

- lwr, representing the lower bound of the plug-in prediction interval

- upr, representing the upper bound of the plug-in prediciton interval

- df: a data frame with 4 columns:

NOTE: you can assume (1) that a function “NLL_myxRicker()” is available in the global environment and that the “myxdat” data object is also available for use.

To complete this exercise will involve going through the final step (7) in the process outlined above:

Step 7. Add confidence/prediction intervals around your estimates

After your run of ‘optim()’ (did you achieve convergence?), plot your fitted Ricker curve to your data. Revisit the earlier prediction interval code to add plug-in prediction intervals around your predicted curve based on gamma distributed errors (should resemble Figure 6.5b on page 184 of text).

NOTE: You can use the “qgamma()” function to build your plug-in intervals!

# Plug-in prediction intervals!

upper<-qgamma(0.975,shape=?, rate=?) # remember that the mean of the gamma distribution is shape*scale

lower<-qgamma(0.025,shape=?, rate=?)Include your function in your submitted r script!

And as always, make sure to test your function code using something like this:

predict_myxRicker1(mle=MLE) # test the function

## x mean lwr upr

## 1 1.000000 2.938143 2.120906 3.886493

## 2 1.090909 3.156828 2.307206 4.137580

## 3 1.181818 3.368237 2.488359 4.379257

## 4 1.272727 3.572538 2.664315 4.611914

## 5 1.363636 3.769898 2.835055 4.835904

## 6 1.454545 3.960480 3.000586 5.051546

## 7 1.545455 4.144444 3.160936 5.259131

## 8 1.636364 4.321947 3.316148 5.458932

## 9 1.727273 4.493141 3.466273 5.651200

## 10 1.818182 4.658177 3.611376 5.836174

## 11 1.909091 4.817201 3.751526 6.014078

## 12 2.000000 4.970358 3.886798 6.185125

## 13 2.090909 5.117789 4.017271 6.349517

## 14 2.181818 5.259632 4.143028 6.507449

## 15 2.272727 5.396021 4.264154 6.659105

## 16 2.363636 5.527089 4.380735 6.804663

## 17 2.454545 5.652967 4.492861 6.944295

## 18 2.545455 5.773780 4.600619 7.078166

## 19 2.636364 5.889653 4.704099 7.206434

## 20 2.727273 6.000708 4.803390 7.329255

## 21 2.818182 6.107064 4.898582 7.446778

## 22 2.909091 6.208838 4.989762 7.559145

## 23 3.000000 6.306143 5.077021 7.666498

## 24 3.090909 6.399091 5.160445 7.768973

## 25 3.181818 6.487793 5.240122 7.866700

## 26 3.272727 6.572354 5.316137 7.959809

## 27 3.363636 6.652880 5.388576 8.048424

## 28 3.454545 6.729474 5.457522 8.132666

## 29 3.545455 6.802235 5.523060 8.212652

## 30 3.636364 6.871263 5.585270 8.288499

## 31 3.727273 6.936653 5.644233 8.360316

## 32 3.818182 6.998499 5.700028 8.428214

## 33 3.909091 7.056894 5.752735 8.492299

## 34 4.000000 7.111928 5.802429 8.552673

## 35 4.090909 7.163689 5.849187 8.609438

## 36 4.181818 7.212263 5.893083 8.662691

## 37 4.272727 7.257736 5.934189 8.712530

## 38 4.363636 7.300188 5.972578 8.759046

## 39 4.454545 7.339702 6.008320 8.802332

## 40 4.545455 7.376356 6.041485 8.842476

## 41 4.636364 7.410228 6.072140 8.879566

## 42 4.727273 7.441394 6.100352 8.913685

## 43 4.818182 7.469927 6.126187 8.944916

## 44 4.909091 7.495899 6.149708 8.973342

## 45 5.000000 7.519383 6.170978 8.999039

## 46 5.090909 7.540447 6.190060 9.022085

## 47 5.181818 7.559158 6.207013 9.042555

## 48 5.272727 7.575584 6.221896 9.060523

## 49 5.363636 7.589788 6.234769 9.076059

## 50 5.454545 7.601835 6.245687 9.089235

## 51 5.545455 7.611785 6.254706 9.100117

## 52 5.636364 7.619701 6.261880 9.108773

## 53 5.727273 7.625640 6.267264 9.115268

## 54 5.818182 7.629661 6.270909 9.119666

## 55 5.909091 7.631821 6.272867 9.122027

## 56 6.000000 7.632174 6.273188 9.122414

## 57 6.090909 7.630776 6.271919 9.120884

## 58 6.181818 7.627678 6.269111 9.117496

## 59 6.272727 7.622932 6.264809 9.112307

## 60 6.363636 7.616589 6.259059 9.105370

## 61 6.454545 7.608698 6.251907 9.096741

## 62 6.545455 7.599308 6.243396 9.086471

## 63 6.636364 7.588465 6.233569 9.074612

## 64 6.727273 7.576215 6.222469 9.061214

## 65 6.818182 7.562605 6.210135 9.046325

## 66 6.909091 7.547676 6.196609 9.029994

## 67 7.000000 7.531473 6.181930 9.012267

## 68 7.090909 7.514037 6.166136 8.993189

## 69 7.181818 7.495409 6.149264 8.972805

## 70 7.272727 7.475629 6.131351 8.951158

## 71 7.363636 7.454737 6.112433 8.928291

## 72 7.454545 7.432770 6.092545 8.904244

## 73 7.545455 7.409765 6.071721 8.879059

## 74 7.636364 7.385760 6.049995 8.852774

## 75 7.727273 7.360789 6.027399 8.825428

## 76 7.818182 7.334887 6.003964 8.797058

## 77 7.909091 7.308088 5.979723 8.767701

## 78 8.000000 7.280424 5.954705 8.737392

## 79 8.090909 7.251929 5.928939 8.706167

## 80 8.181818 7.222634 5.902456 8.674059

## 81 8.272727 7.192568 5.875283 8.641101

## 82 8.363636 7.161763 5.847447 8.607326

## 83 8.454545 7.130248 5.818976 8.572766

## 84 8.545455 7.098050 5.789896 8.537450

## 85 8.636364 7.065198 5.760232 8.501409

## 86 8.727273 7.031719 5.730009 8.464673

## 87 8.818182 6.997639 5.699252 8.427270

## 88 8.909091 6.962984 5.667984 8.389227

## 89 9.000000 6.927779 5.636230 8.350572

## 90 9.090909 6.892049 5.604010 8.311332

## 91 9.181818 6.855818 5.571347 8.271531

## 92 9.272727 6.819109 5.538263 8.231196

## 93 9.363636 6.781944 5.504779 8.190350

## 94 9.454545 6.744346 5.470915 8.149018

## 95 9.545455 6.706336 5.436690 8.107222

## 96 9.636364 6.667936 5.402125 8.064987

## 97 9.727273 6.629166 5.367238 8.022332

## 98 9.818182 6.590045 5.332047 7.979281

## 99 9.909091 6.550593 5.296570 7.935854

## 100 10.000000 6.510830 5.260826 7.892072Exercise 4: Confidence Intervals on parameters

In this exercise you will construct confidence intervals that reflect our uncertainty about the parameter estimates in the Ricker/virus titer example. We will then construct prediction intervals that include our uncertainty about the parameter values.

Again, you can assume that there is a data frame in your environment named ‘myxdat’ with columns named ‘days’ and ‘titer’ that contain the data.

Exercise 3.4a: Gaussian approximation

The first step is to optimize your likelihood function, this time using the “hessian=T” argument to the ‘optim’ function:

# 3.4a -----------

temp <- optim(NLL_myxRicker,par = c(a=2,b=0.2,rate=2),hessian = T)

MLE = temp$par

MinNLL = temp$value

H = temp$hessian

H## a b rate

## a 224.5874208 -4080.39276 0.27045577

## b -4080.3927576 83586.61145 -4.73994026

## rate 0.2704558 -4.73994 0.06511682To compute confidence intervals, we first need to compute the standard error of the parameters. This is pretty easy if we remember that the MLE is asymptotically multivariate normally distributed with a variance-covariance matrix of \(H^{-1}\), where H is the hessian matrix (matrix of second partial derivatives of the negative log-likelihood function with respect to each parameter) evaluated at the MLE. In other words, the variance-covariance matrix of the parameter vector at the MLE is approximately (asymptotically) equal to the inverse of the hessian matrix of the negative log-likelihood function, evaluated at the MLE. This sounds complicated but is pretty simple to do in R.

Once you have computed the variance covariance matrix for the MLE parameters, you can compute the standard error as the square root of the diagonals of the variance covariance matrix (the diagonal elements represent variances). Finally, to compute a margin of error for a confidence interval, you can multiply the standard error by the appropriate quantile from a standard normal distribution- for example, for a 95% confidence interval you would multiply the sterr by 1.96, which is the 0.025 quantile of the standard normal distribution (if you exclude 0.025 from the top and the bottom of the distribution, the middle portion contains 95% of the probability mass. Finally, you can add and subtract this margin of error to compute a 95% confidence interval around each parameter.

Write a function called “CI_myxRicker1()” for computing a confidence interval for all free parameters in this model.

- input:

- mle = named vector containing the maximum likelihood values for the params (length 3: a and b params from the Ricker model [see below], and the rate parameter of the Gamma distribution)(names should be “a”,“b”, and “rate”)

- H = the hessian matrix obtained from optimizing the negative-log-likelihood function (i.e., from ‘optim()’ with ‘hessian=T’)

- suggested algorithm:

- compute variance-covariance matrix (VCM) from the hessian matrix as described above.

- extract the diagonal elements of the VCM and take the square root- this is the standard error of your parameters.

- compute the margin of error by multiplying the standard error of each parameter by the appropriate quantile of the z distribution (standard normal). In this case you want the 97.5% quantile.

- return:

- a matrix: each row is a parameter (in order: a, b, and rate) and there are four columns: first, the mle values, second, the standard error, third, the lower bound of the confidence interval, and fourth, the upper bound of the confidence interval.

HINT: to take the inverse of a matrix in R, you can use the “solve()” function.

You can test your function code using something like this:

CI_myxRicker1(MLE,H) # test the function## mle se lwr upr

## a 3.4736659 0.19852047 3.084573 3.8627589

## b 0.1674329 0.01028582 0.147273 0.1875927

## rate 14.4231248 3.92873690 6.722942 22.1233077Exercise 3.4b: confidence intervals on predictions

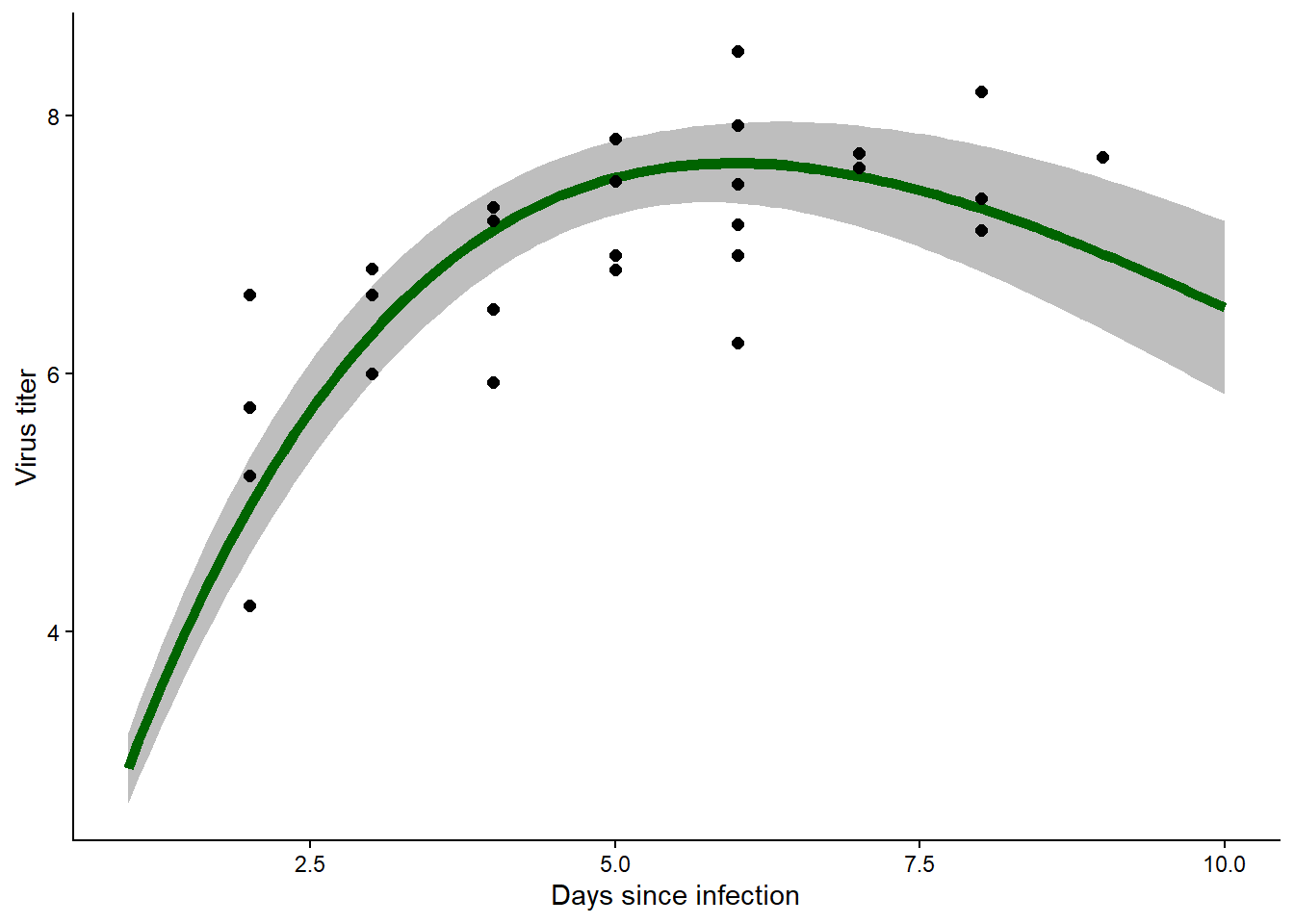

Write a function called “predict_myxRicker2()” that computes a 95% confidence interval for predicted titer values across a range of days-since-exposure.

To do this, we will use a nice trick called the delta method for computing the standard error of the expected titer computed from the MLE. The delta method is applicable any time we want to compute the standard error for a transformation of an asymptotically normally (or multivariate-normally) distributed quantity/vector. Mathematically, it is based on a Taylor expansion, so it is important that the transformation function is differentiable.

In this class, we will use the delta method to find the variance or standard error of arbitrary transformations of the MLE. To do this, we need the MLE estimates, and we need the variance-covariance matrix of the MLE estimates, which we can compute as the inverse of the hessian matrix of the negative-log-likelihood function.

\[VCV_{\theta, MLE} = {H_{\theta, MLE}}^{-1}\] Now consider some function of the MLE parameters, \(f(\vec{\theta})\). Recalling that the MLE is asymptotically normally distributed such that \(\hat{\theta} \sim MVN(\hat{\theta}_{MLE},VCV_{\theta, MLE})\), then we can compute the approximate variance of our new estimated quantity:

\[f(\theta) \sim Normal(f(\hat{\theta}_{MLE}),\nabla f(\hat{\theta}_{MLE})^T \times VCV_{\theta, MLE} \times \nabla f(\hat{\theta}_{MLE}) )\] That is, the variance of the transformed random variable is:

\[\nabla f(\hat{\theta}_{MLE})^T \times VCV_{\theta, MLE} \times \nabla f(\hat{\theta}_{MLE})\]

The standard error is just the square root of the variance. Finally, you can compute a confidence interval by multiplying the standard error by the desired quantile of the standard normal distribution. For a 95% CI, just multiply the standard error by the upper 0.025 quantile of the z distribution, and add and subtract this quantity from the mean value of the quantity. Here is an example so you can start getting the hang of it!

# assume X is a random variable with mean of c(2.2, 4.1)

exp_X = c(2.2,4.1) # expected value of random MVN variable

vcv_X = matrix(c(1,.2,.2,1.5),nrow=2) # vcv matrix of X

# imagine we want to evaluate a transformation of X: 1/(x1^2 + log(x2))

myfun = function(x) 1/(x[1]^2+log(x[2])) # write a function for your transformation

# compute the gradient of the transformation with respect to the components of X,

# evaluated at the mean values of X

f_prime_X = numDeriv::grad(myfun,exp_X)

# apply the delta method to obtain an approximate standard error:

var_fX = (t(f_prime_X) %*% vcv_X %*% f_prime_X)[1,1]

# compute the standard error:

se_fX = sqrt(var_fX)

# compute the critical value for 95% CI

z_crit = qnorm(0.025,lower=F)

# compute confidence interval

ci_fX = myfun(exp_X) + z_crit*se_fX * c(mean=0,lower=-1,upper=1)

ci_fX## mean lower upper

## 0.15997474 -0.06366178 0.38361125Note that Bolker’s ‘emdbook’ package has a ‘deltavar()’ function for computing variances using the delta method. You are welcome to use Bolker’s function, but I encourage you to apply the delta method ‘from scratch’ using the ‘numDeriv’ package to compute gradients.

As extra credit, see if you can compute and visualize a 95% prediction interval. You can do this by applying the delta method to compute the ‘shape’ parameter of the gamma distribution, which is a derived parameter involving all three parameters you estimated in this problem (a, b, and rate).

NOTE: you will need to apply the delta method for every prediction you make- that is, as you vary the number of days since exposure, the variance of the predictions will change.

- input:

- mle = named vector containing the maximum likelihood values for the params (length 3: a and b params from the Ricker model [see below], and the rate parameter of the Gamma distribution)(names should be “a”,“b”, and “rate”)

- H = the hessian matrix obtained from optimizing the negative-log-likelihood function (i.e., from ‘optim()’ with ‘hessian=T’)

- prediction = a logical T/F indicating whether to generate a prediction interval (if TRUE) or a confidence interval (if FALSE). Set this to FALSE as a default. Since this is an optional problem, you don’t need to incorporate this argument in your algorithm, but please include it anyway!

- suggested algorithm:

- compute variance-covariance matrix (VCM) from the hessian matrix as described above.

- make a data frame with a column “x” spanning the range of the time-since-infection (predictor variable, in days).

- add a column with the predictions from the Ricker model based on the MLE parameters (just the Ricker parameters) across the range of times since exposure.

- use the delta method to compute the standard error for all of your predictions, evaluated at the MLE

- Finally, visualize prediction intervals around your MLE line.

- if prediction=FALSE, then sample many times from a multivariate normal distribution with mean at the MLE and VCM equal to the VCM computed above. For each sampled parameter vector, add the appropriate noise (here, gamma distributed).

- summarize the upper and lower bound for each

- compute the margin of error by multiplying the standard error of each parameter by the appropriate quantile of the z distribution (standard normal). In this case you want the 97.5% quantile (make sure you know why!).

- return:

- a matrix: each row is a parameter (in order: a, b, and rate) and there are three columns: first, the mle values, second, the lower bound of the confidence/prediction interval, and third, the upper bound of the confidence/prediction interval.

You can test your function code using something like this:

predict_myxRicker2(MLE,H,prediction = T) # test the function

## x mean lwr upr

## 1 1.000000 2.938143 2.120906 3.886493

## 2 1.090909 3.156828 2.307206 4.137580

## 3 1.181818 3.368237 2.488359 4.379257

## 4 1.272727 3.572538 2.664315 4.611914

## 5 1.363636 3.769898 2.835055 4.835904

## 6 1.454545 3.960480 3.000586 5.051546

## 7 1.545455 4.144444 3.160936 5.259131

## 8 1.636364 4.321947 3.316148 5.458932

## 9 1.727273 4.493141 3.466273 5.651200

## 10 1.818182 4.658177 3.611376 5.836174

## 11 1.909091 4.817201 3.751526 6.014078

## 12 2.000000 4.970358 3.886798 6.185125

## 13 2.090909 5.117789 4.017271 6.349517

## 14 2.181818 5.259632 4.143028 6.507449

## 15 2.272727 5.396021 4.264154 6.659105

## 16 2.363636 5.527089 4.380735 6.804663

## 17 2.454545 5.652967 4.492861 6.944295

## 18 2.545455 5.773780 4.600619 7.078166

## 19 2.636364 5.889653 4.704099 7.206434

## 20 2.727273 6.000708 4.803390 7.329255

## 21 2.818182 6.107064 4.898582 7.446778

## 22 2.909091 6.208838 4.989762 7.559145

## 23 3.000000 6.306143 5.077021 7.666498

## 24 3.090909 6.399091 5.160445 7.768973

## 25 3.181818 6.487793 5.240122 7.866700

## 26 3.272727 6.572354 5.316137 7.959809

## 27 3.363636 6.652880 5.388576 8.048424

## 28 3.454545 6.729474 5.457522 8.132666

## 29 3.545455 6.802235 5.523060 8.212652

## 30 3.636364 6.871263 5.585270 8.288499

## 31 3.727273 6.936653 5.644233 8.360316

## 32 3.818182 6.998499 5.700028 8.428214

## 33 3.909091 7.056894 5.752735 8.492299

## 34 4.000000 7.111928 5.802429 8.552673

## 35 4.090909 7.163689 5.849187 8.609438

## 36 4.181818 7.212263 5.893083 8.662691

## 37 4.272727 7.257736 5.934189 8.712530

## 38 4.363636 7.300188 5.972578 8.759046

## 39 4.454545 7.339702 6.008320 8.802332

## 40 4.545455 7.376356 6.041485 8.842476

## 41 4.636364 7.410228 6.072140 8.879566

## 42 4.727273 7.441394 6.100352 8.913685

## 43 4.818182 7.469927 6.126187 8.944916

## 44 4.909091 7.495899 6.149708 8.973342

## 45 5.000000 7.519383 6.170978 8.999039

## 46 5.090909 7.540447 6.190060 9.022085

## 47 5.181818 7.559158 6.207013 9.042555

## 48 5.272727 7.575584 6.221896 9.060523

## 49 5.363636 7.589788 6.234769 9.076059

## 50 5.454545 7.601835 6.245687 9.089235

## 51 5.545455 7.611785 6.254706 9.100117

## 52 5.636364 7.619701 6.261880 9.108773

## 53 5.727273 7.625640 6.267264 9.115268

## 54 5.818182 7.629661 6.270909 9.119666

## 55 5.909091 7.631821 6.272867 9.122027

## 56 6.000000 7.632174 6.273188 9.122414

## 57 6.090909 7.630776 6.271919 9.120884

## 58 6.181818 7.627678 6.269111 9.117496

## 59 6.272727 7.622932 6.264809 9.112307

## 60 6.363636 7.616589 6.259059 9.105370

## 61 6.454545 7.608698 6.251907 9.096741

## 62 6.545455 7.599308 6.243396 9.086471

## 63 6.636364 7.588465 6.233569 9.074612

## 64 6.727273 7.576215 6.222469 9.061214

## 65 6.818182 7.562605 6.210135 9.046325

## 66 6.909091 7.547676 6.196609 9.029994

## 67 7.000000 7.531473 6.181930 9.012267

## 68 7.090909 7.514037 6.166136 8.993189

## 69 7.181818 7.495409 6.149264 8.972805

## 70 7.272727 7.475629 6.131351 8.951158

## 71 7.363636 7.454737 6.112433 8.928291

## 72 7.454545 7.432770 6.092545 8.904244

## 73 7.545455 7.409765 6.071721 8.879059

## 74 7.636364 7.385760 6.049995 8.852774

## 75 7.727273 7.360789 6.027399 8.825428

## 76 7.818182 7.334887 6.003964 8.797058

## 77 7.909091 7.308088 5.979723 8.767701

## 78 8.000000 7.280424 5.954705 8.737392

## 79 8.090909 7.251929 5.928939 8.706167

## 80 8.181818 7.222634 5.902456 8.674059

## 81 8.272727 7.192568 5.875283 8.641101

## 82 8.363636 7.161763 5.847447 8.607326

## 83 8.454545 7.130248 5.818976 8.572766

## 84 8.545455 7.098050 5.789896 8.537450

## 85 8.636364 7.065198 5.760232 8.501409

## 86 8.727273 7.031719 5.730009 8.464673

## 87 8.818182 6.997639 5.699252 8.427270

## 88 8.909091 6.962984 5.667984 8.389227

## 89 9.000000 6.927779 5.636230 8.350572

## 90 9.090909 6.892049 5.604010 8.311332

## 91 9.181818 6.855818 5.571347 8.271531

## 92 9.272727 6.819109 5.538263 8.231196

## 93 9.363636 6.781944 5.504779 8.190350

## 94 9.454545 6.744346 5.470915 8.149018

## 95 9.545455 6.706336 5.436690 8.107222

## 96 9.636364 6.667936 5.402125 8.064987

## 97 9.727273 6.629166 5.367238 8.022332

## 98 9.818182 6.590045 5.332047 7.979281

## 99 9.909091 6.550593 5.296570 7.935854

## 100 10.000000 6.510830 5.260826 7.892072predict_myxRicker2(MLE,H,prediction = F)

## x mean lwr upr

## 1 1.000000 2.938143 2.664075 3.212210

## 2 1.090909 3.156828 2.867644 3.446013

## 3 1.181818 3.368237 3.065300 3.671174

## 4 1.272727 3.572538 3.257157 3.887919

## 5 1.363636 3.769898 3.443327 4.096469

## 6 1.454545 3.960480 3.623918 4.297042

## 7 1.545455 4.144444 3.799037 4.489851

## 8 1.636364 4.321947 3.968790 4.675103

## 9 1.727273 4.493141 4.133279 4.853003

## 10 1.818182 4.658177 4.292604 5.023749

## 11 1.909091 4.817201 4.446865 5.187538

## 12 2.000000 4.970358 4.596157 5.344560

## 13 2.090909 5.117789 4.740574 5.495004

## 14 2.181818 5.259632 4.880209 5.639054

## 15 2.272727 5.396021 5.015152 5.776890

## 16 2.363636 5.527089 5.145491 5.908688

## 17 2.454545 5.652967 5.271310 6.034624

## 18 2.545455 5.773780 5.392694 6.154866

## 19 2.636364 5.889653 5.509724 6.269582

## 20 2.727273 6.000708 5.622479 6.378938

## 21 2.818182 6.107064 5.731035 6.483093

## 22 2.909091 6.208838 5.835467 6.582208

## 23 3.000000 6.306143 5.935847 6.676438

## 24 3.090909 6.399091 6.032244 6.765938

## 25 3.181818 6.487793 6.124726 6.850860

## 26 3.272727 6.572354 6.213356 6.931352

## 27 3.363636 6.652880 6.298197 7.007564

## 28 3.454545 6.729474 6.379308 7.079639

## 29 3.545455 6.802235 6.456746 7.147724

## 30 3.636364 6.871263 6.530566 7.211960

## 31 3.727273 6.936653 6.600818 7.272488

## 32 3.818182 6.998499 6.667552 7.329447

## 33 3.909091 7.056894 6.730813 7.382976

## 34 4.000000 7.111928 6.790646 7.433210

## 35 4.090909 7.163689 6.847093 7.480286

## 36 4.181818 7.212263 6.900192 7.524335

## 37 4.272727 7.257736 6.949981 7.565490

## 38 4.363636 7.300188 6.996497 7.603879

## 39 4.454545 7.339702 7.039774 7.639630

## 40 4.545455 7.376356 7.079848 7.672865

## 41 4.636364 7.410228 7.116752 7.703704

## 42 4.727273 7.441394 7.150522 7.732265

## 43 4.818182 7.469927 7.181196 7.758657

## 44 4.909091 7.495899 7.208811 7.782988

## 45 5.000000 7.519383 7.233409 7.805357

## 46 5.090909 7.540447 7.255036 7.825857

## 47 5.181818 7.559158 7.273741 7.844576

## 48 5.272727 7.575584 7.289575 7.861592

## 49 5.363636 7.589788 7.302599 7.876978

## 50 5.454545 7.601835 7.312872 7.890797

## 51 5.545455 7.611785 7.320463 7.903108

## 52 5.636364 7.619701 7.325442 7.913960

## 53 5.727273 7.625640 7.327883 7.923398

## 54 5.818182 7.629661 7.327863 7.931460

## 55 5.909091 7.631821 7.325463 7.938179

## 56 6.000000 7.632174 7.320762 7.943586

## 57 6.090909 7.630776 7.313845 7.947707

## 58 6.181818 7.627678 7.304792 7.950563

## 59 6.272727 7.622932 7.293687 7.952177

## 60 6.363636 7.616589 7.280611 7.952567

## 61 6.454545 7.608698 7.265644 7.951752

## 62 6.545455 7.599308 7.248865 7.949750

## 63 6.636364 7.588465 7.230351 7.946579

## 64 6.727273 7.576215 7.210177 7.942254

## 65 6.818182 7.562605 7.188415 7.936794

## 66 6.909091 7.547676 7.165135 7.930217

## 67 7.000000 7.531473 7.140406 7.922539

## 68 7.090909 7.514037 7.114293 7.913780

## 69 7.181818 7.495409 7.086860 7.903958

## 70 7.272727 7.475629 7.058166 7.893093

## 71 7.363636 7.454737 7.028272 7.881202

## 72 7.454545 7.432770 6.997232 7.868308

## 73 7.545455 7.409765 6.965102 7.854429

## 74 7.636364 7.385760 6.931934 7.839586

## 75 7.727273 7.360789 6.897778 7.823799

## 76 7.818182 7.334887 6.862683 7.807091

## 77 7.909091 7.308088 6.826695 7.789480

## 78 8.000000 7.280424 6.789859 7.770990

## 79 8.090909 7.251929 6.752219 7.751640

## 80 8.181818 7.222634 6.713816 7.731452

## 81 8.272727 7.192568 6.674690 7.710447

## 82 8.363636 7.161763 6.634881 7.688646

## 83 8.454545 7.130248 6.594425 7.666071

## 84 8.545455 7.098050 6.553359 7.642741

## 85 8.636364 7.065198 6.511717 7.618679

## 86 8.727273 7.031719 6.469534 7.593904

## 87 8.818182 6.997639 6.426841 7.568437

## 88 8.909091 6.962984 6.383670 7.542298

## 89 9.000000 6.927779 6.340051 7.515507

## 90 9.090909 6.892049 6.296014 7.488085

## 91 9.181818 6.855818 6.251586 7.460050

## 92 9.272727 6.819109 6.206795 7.431422

## 93 9.363636 6.781944 6.161668 7.402220

## 94 9.454545 6.744346 6.116229 7.372463

## 95 9.545455 6.706336 6.070504 7.342169

## 96 9.636364 6.667936 6.024515 7.311357

## 97 9.727273 6.629166 5.978288 7.280044

## 98 9.818182 6.590045 5.931842 7.248248

## 99 9.909091 6.550593 5.885200 7.215987

## 100 10.000000 6.510830 5.838383 7.183277Exercise 4.1c (optional extra credit!): Profile Likelihood confidence interval

In this optional (extra credit) exercise, you will add an option to construct profile likelihood confidence intervals instead of using the normal approximation (using the Hessian matrix, above). parameter using repeated calls to the “optim()” function.

To do this you will need to modify your likelihood functions and “optim()” commands to estimate only the parameters other than the one you are trying to get a CI for (because you’ll be fixing that parameter at many values on either side of the MLE).

Again, you can assume that there is a dataframe in your environment named ‘myxdat’ with columns named ‘days’ and ‘titer’ that contain the data.

Since this is for extra credit, I am not giving you a suggested algorithm here. Take this as a fun challenge! You might start by writing down some psuedocode to think through the problem before you start coding. Try to do it without help from a chatbot. If you do use a chatbot, ask it to help find solutions using base R. This course is about building custom algorithms so it’s kind of cheating if you use higher level functions that “hide” the underlying algorithms.

input:

- mle = named vector containing the maximum likelihood values for the params (length 3: a and b params from the Ricker model [see below], and the rate parameter of the Gamma distribution)(names should be “a”,“b”, and “rate”)

- H = the hessian matrix obtained from optimizing the negative-log-likelihood function (i.e., from ‘optim()’ with ‘hessian=T’)

- nll = the minimum negative-log-likelihood at the MLE

- profile = a logical T/F indicating whether or not to use the profile likelihood method. If not, the function should return confidence intervals using the normal approximation with the hessian matrix.

suggested algorithm:

Up to you!return:

- a matrix: each row is a parameter (in order: a, b, and rate) and there are three columns: first, the mle values, second, the lower bound of the confidence interval, and third, the upper bound of the confidence interval.

Finally, test the function and compare the profile likelihood method with the hessian method:

CI_myxRicker2(MLE,H,MinNLL,profile=T,alpha=0.05)## mle lb ub

## [1,] 3.4736659 3.0942776 3.8948218

## [2,] 0.1674329 0.1467973 0.1880684

## [3,] 14.4231248 8.0497801 21.6346873CI_myxRicker2(MLE,H,MinNLL,profile=F,alpha=0.05)## mle lwr upr

## a 3.4736659 3.084573 3.8627589

## b 0.1674329 0.147273 0.1875927

## rate 14.4231248 6.722942 22.1233077—————End of lab 3————————